Big Data in the Life Sciences

Next-generation imaging technologies are producing a wealth of rich data to advance Life Sciences research. Artificial intelligence and machine learning can help researchers quickly derive insights from such data. However, many organizations do not have the compute and storage infrastructure to handle the combination of enormous imaging data volumes and demanding analysis workloads.

The reason: Installed infrastructures were designed to handle the requirements of next-generation sequencing (NGS), which was released into the market over a decade ago. While infrastructure deployments supporting NGS were large and certainly expensive, they did not necessarily require the most advanced computing technologies. Despite some successes in leveraging GPUs, CPU extensions, and FPGAs, these technologies never gained much traction in NGS analysis. Traditional genomics pipeline needs were met with commodity CPUs and mid-range performance file systems.

While the challenges associated with sequence analysis are well understood and addressable, new technologies and advancements in existing instruments promise to upend the way research IT builds its storage infrastructure.

Imaging Technologies Contributing to Life Science Data Growth

Though many imaging technologies have existed for years or even decades, a few are experiencing rapid growth and development, with an associated increase in storage demands. Specifically, technologies like Cryogenic Electron Microscopy (CryoEM) and Lattice Light Sheet Microscopy are having major impacts on research and human health. Such technologies are challenging how storage is implemented and used in a number of ways.

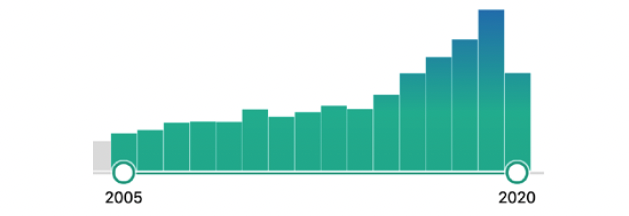

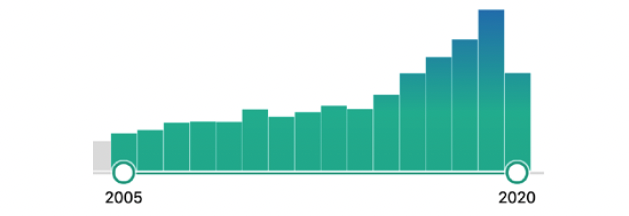

Growth of CryoEM publications over the past 15 years. Note the rapid increase in number of publications in the past 6 years, coinciding with improvements in CryoEM technology.

Image Source: PubMed

Cryogenic Electron Microscopy (CryoEM)

CryoEM is a technique that uses electron microscopy to image cryogenically frozen molecules. Its primary advantage over X-ray crystallography, an older and more common structure determination technique, is the ability to use molecular samples that are not crystallized. Many molecules cannot be crystallized, or have their structures changed by the crystallization process. Historically, although CryoEM could image molecules in a more native state, its resolution was too poor to yield scientific insights at the level of X-ray structures. Recent advancements in CryoEM, however, are now increasing scientific interest in the technique and broadening its applicability.

While CryoEM has been in use since the 1980s, improvements in detector technology and software have led to massive growth in the past five years. With these changes, the resolution has improved immensely and increased the utility of the results. However, this improvement has come with associated larger data sizes and increased storage and processing demands.

Current microscopes can generate TBs of data per day, and that amount continues to grow. Furthermore, many organizations are deploying multiple devices, so it is not unreasonable to expect multiple petabytes of data generated per year at a single organization from just CryoEM.
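The step from terabytes per day to petabytes per year is simple arithmetic, and a minimal sketch makes it concrete. The function name and the input figures below (instrument count, daily output, active days) are hypothetical placeholders chosen for illustration, not measurements from any particular site.

```python
def annual_volume_pb(tb_per_day: float, instruments: int, active_days: int = 365) -> float:
    """Estimate yearly raw data volume in petabytes (decimal units, 1 PB = 1000 TB)."""
    if tb_per_day < 0 or instruments < 0 or active_days < 0:
        raise ValueError("inputs must be non-negative")
    return tb_per_day * instruments * active_days / 1000.0


if __name__ == "__main__":
    # Hypothetical site: 3 microscopes, 4 TB/day each, collecting 250 days a year.
    estimate = annual_volume_pb(tb_per_day=4.0, instruments=3, active_days=250)
    print(f"Estimated raw volume: {estimate:.1f} PB/year")
```

Under these assumed inputs the estimate is 3 PB per year, before any derived or intermediate data is counted.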

Organizations that are deploying CryoEM instruments face the challenge of rapidly advancing technology, as well as adding more devices. From a compute and storage standpoint, a parallel file system that can grow with the infrastructure is required both for capacity and analysis. Additionally, software for CryoEM analysis is multithreaded and can take advantage of both increasing core counts and GPUs. Intelligently compiling the software to scale across multicore CPUs, and to use GPUs for acceleration, is key to running the analysis as fast as data is generated.

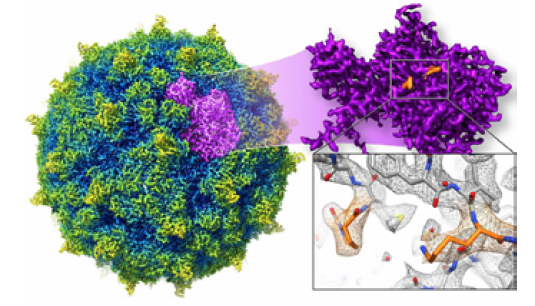

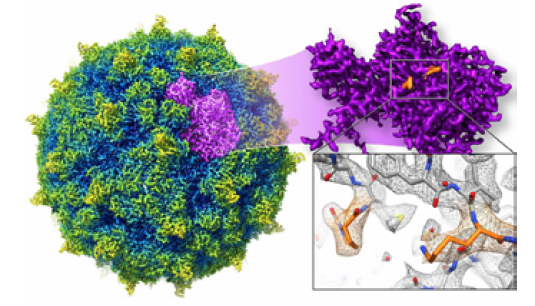

Rhinovirus atomic structure as determined by CryoEM. Structures of this resolution are now produced by CryoEM at a greater rate than ever before.

Image Source: PNAS

Lattice Light Sheet Microscopy (LLS)

Lattice Light Sheet Microscopy, developed by Nobel Prize winner Eric Betzig, is a relatively new technique, having been developed only in the past five years. It allows long-timescale imaging of dynamic biological processes in 3D. It is unique among microscopy techniques in that there is little damage to the sample, meaning living organisms can be imaged in real time. With other technologies, the sample is damaged, and long videos cannot be recorded.

LLS works by using thin sheets of light to illuminate the sample, which can be living tissue, cells, or organisms. The technique is less damaging to live samples and enables longer acquisition times (hours instead of seconds or minutes). The sheet of light also enables faster data acquisition than more traditional methods such as confocal microscopy. By operating at a higher acquisition rate, researchers can visualize previously unseen biological processes in three dimensions.

Like CryoEM, LLS generates TBs of data per day from each instrument. Currently, data output is comparable to or exceeds that of CryoEM, and it can be expected to grow as the technology improves and more organizations deploy microscopes. Lattice Light Sheet requires GPUs and high-performance storage to analyze the datasets in a timely manner. As with the other techniques mentioned, the improvement in scientific resolution (in this case, recording length and data acquisition rate) contributes to the massive data output sizes.

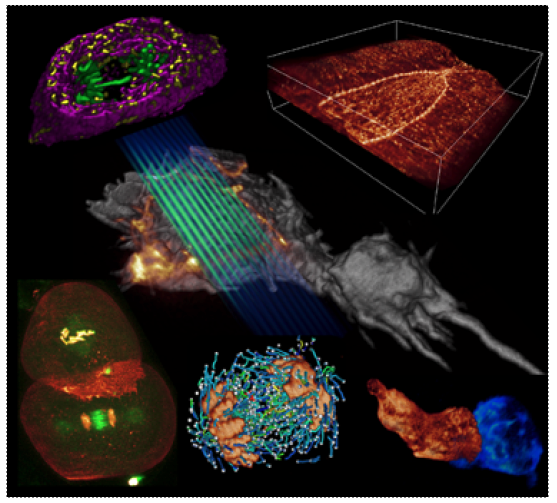

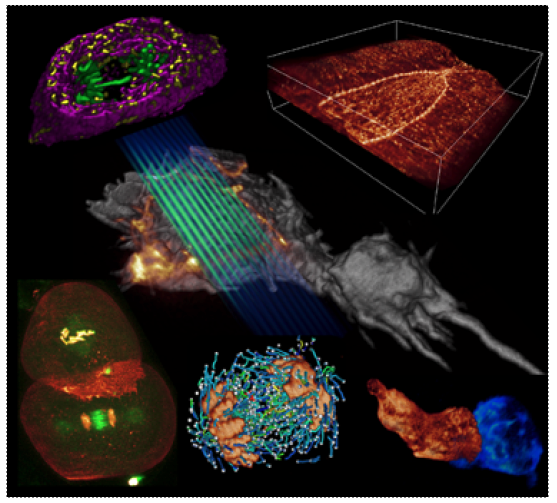

Six lattice light-sheet examples. As light planes move through the specimen, 3D images are generated.

Image Source: CBMF Harvard Medical School

Infrastructure Considerations for Future Image Analysis

The infrastructure designs currently implemented for NGS will not meet the needs of image analysis and mixed-workload pipelines. Storage is central to image analysis workflows, and it must have the capacity to meet the data volumes and the performance to meet changing workloads. Previously, large amounts of medium-performance storage and CPU-only nodes connected at 1G or 10G supplied adequate performance for genomics pipelines. With the growth of image-generating devices, storage and compute requirements are far more demanding. Multicore nodes, as well as GPU nodes, are required. Existing 10G networking will not provide the bandwidth needed to get these larger datasets to GPUs or multicore servers. Ideally, an image analysis-ready infrastructure will need high-capacity, high-performance storage, and GPU nodes connected to storage at 25G or better.
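To see why link speed matters for datasets of this size, a back-of-the-envelope transfer-time calculation can help. The sketch below is illustrative only: the 5 TB dataset size and the 80% link efficiency are assumptions, not figures from this article, and real throughput also depends on the storage system, protocol, and contention.

```python
def transfer_hours(dataset_tb: float, link_gbps: float, efficiency: float = 0.8) -> float:
    """Hours needed to move a dataset over a network link.

    dataset_tb : dataset size in decimal terabytes (1 TB = 1e12 bytes)
    link_gbps  : nominal link speed in gigabits per second
    efficiency : assumed fraction of nominal bandwidth actually achieved
    """
    if link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link_gbps must be > 0 and efficiency in (0, 1]")
    bits = dataset_tb * 1e12 * 8                  # bytes -> bits
    usable_bits_per_second = link_gbps * 1e9 * efficiency
    return bits / usable_bits_per_second / 3600   # seconds -> hours


if __name__ == "__main__":
    dataset_tb = 5.0  # hypothetical single-day output from one instrument
    for gbps in (1, 10, 25, 100):
        hours = transfer_hours(dataset_tb, gbps)
        print(f"{gbps:>3}G link: {hours:6.2f} h to move {dataset_tb} TB")
```

Transfer time scales inversely with usable bandwidth, so under the same assumptions a 25G link moves a given dataset in 40% of the time a 10G link needs.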

Demands on infrastructure will grow with scientific output. Research IT staff will need to spend more time supporting researchers and deploying experimental technologies than ever before. Storage, central to image analysis workflows, must be stable and reliable in addition to high-performance. Furthermore, research IT staff will have less and less time to fight fires or get involved in complicated system configurations, because their skills will be needed to support researchers in a greater capacity. The growing sophistication of research pipelines will require increased contributions from IT staff, and IT will transition from an infrastructure support role to that of a partner in accelerating discovery.

Artificial Intelligence and Machine Learning in Image Analysis

Another factor driving infrastructure demands is the growing use of artificial intelligence (AI) and machine learning (ML) for image analysis.

The rate at which images are generated far exceeds the ability of humans to analyze them manually. Great potential exists for AI/ML to accelerate research in image analysis. However, two main challenges exist.

- Image files, especially legacy data, are spread across disparate storage platforms with limited or no file management in place. Naming conventions and standardized directory structures rarely exist within organizations, and almost never exist externally.

- Some research areas, such as CryoEM, are only now generating enough quality datasets to use for training models. Previously, there simply was not sufficient data to enable algorithm development. The growth in datasets has produced some early wins (e.g., in the particle picking step of the processing pipeline), with software now performing comparably to manual human analysis.

Unfortunately, until major progress can be made solving the data management challenges surrounding Life Sciences image files, large-scale breakthroughs will be few and far between. Organizations that immediately start managing their data as they generate it will be much better positioned to take advantage of AI/ML. As this data is created, it is important to have an AI/ML-ready infrastructure in place to handle the analysis. What’s needed is a high-performance infrastructure with a parallel file system that can saturate GPUs to efficiently and quickly run image-related Life Sciences AI/ML workflows.
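One way to start managing data as it is generated is to derive every file path from acquisition metadata instead of letting each user pick names by hand. The sketch below shows one possible convention; the `Acquisition` fields, the slug rule, and the directory layout are all hypothetical choices made for illustration, not an established standard.

```python
import re
from dataclasses import dataclass
from datetime import date
from pathlib import PurePosixPath

# Assumed rule: lowercase letters and digits, optionally separated by single hyphens.
_SLUG = re.compile(r"^[a-z0-9]+(?:-[a-z0-9]+)*$")


@dataclass(frozen=True)
class Acquisition:
    """Minimal metadata for one image file (hypothetical schema)."""
    instrument: str
    project: str
    sample: str
    acquired: date
    frame: int


def standard_path(acq: Acquisition, suffix: str = ".tiff") -> PurePosixPath:
    """Build <instrument>/<YYYY-MM-DD>/<project>/<sample>_<frame>.<ext> from metadata."""
    for value in (acq.instrument, acq.project, acq.sample):
        if not _SLUG.match(value):
            raise ValueError(f"not a valid name component: {value!r}")
    if acq.frame < 0:
        raise ValueError("frame number must be non-negative")
    filename = f"{acq.sample}_{acq.frame:05d}{suffix}"
    return PurePosixPath(acq.instrument, acq.acquired.isoformat(), acq.project, filename)


if __name__ == "__main__":
    acq = Acquisition("cryoem-01", "spike-protein", "grid-a3", date(2020, 3, 1), 42)
    print(standard_path(acq))
```

Rejecting names that break the convention at write time is a deliberate choice here: it is far cheaper than reconciling inconsistent legacy directories when a training set is assembled later.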

COVID-19 and CryoEM

It is easy to get caught up in the technical details of emerging technologies. Often, the research that accompanies these techniques can appear esoteric, with no practical application. CryoEM, however, has had major success in helping the global effort against COVID-19. In March of 2020, researchers were able to use CryoEM to visualize the structure of the 2019-nCoV trimeric spike glycoprotein. The structure was rapidly determined in a biologically relevant state at 3.5 Angstroms of resolution, which is comparable to X-ray crystallography techniques.

This viral protein is a key target for vaccines, drugs, antibodies, and diagnostics, and its structure contributes to our understanding of infection. Now that this structure has been determined, it can be used to guide therapeutic efforts going forward, as described in the article "Cryo-EM structure of the 2019-nCoV spike in the prefusion conformation" in the March 2020 issue of Science.

The Way Forward for Image Analysis

The IT infrastructure designs used for the past ten years to meet NGS needs will not meet the current and future analysis requirements of image analysis in the Life Sciences. Organizations that were used to genomics-only workloads will soon be challenged with analysis related to more recently adopted imaging technologies and the resulting increases in data sizes and volumes. These mixed workloads will stress storage infrastructure in unforeseen ways. As organizations plan for the changing landscape of research pipelines, they must ensure a data storage foundation that delivers high performance in a reliable, scalable and adaptable way. In doing so, IT staff can free themselves from the administrative burden of storage systems and move towards becoming a partner in scientific discovery.
